The Linked Data Challenge

A week ago I issued a challenge to Linked Data developers – using a Linked Data service, such as DBpedia, tell me which town or city in the UK has the largest proportion of students. I’m pleased to say that a developer, Alejandra Garcia Rojas, has responded to my challenge and provided an answer. This post, however, is less about the answer than about the development process, the limitations of the approach and the issues which the challenge has raised. The post concludes with a revised challenge for Linked Data (and other) developers.

The Motivation For The Challenge

Before revealing the answer I should explain why I posed this challenge. I can recall Tim Berners-Lee introducing the Semantic Web at a WWW conference many years ago – looking at my trip reports it was the WWW 7 conference held in Brisbane in 1998. My report links to the slides Tim Berners-Lee used in his presentation in which he encouraged the Web development community to engage with the Semantic Web. I was particularly interested in his slide in which he outlined some of the user problems which the Semantic Web would address:

- Can Joe access the party photos?

- Who are all the people who can?

- Is there a green car for sale for around $15000 in Queensland?

- Did someone driving a blue car send us an invoice for over $10000?

- What was the average temperature in 1997 in Brisbane?

- Please fill in my tax form!

I was interested to see whether, 12 years on, such questions can be answered using what is now referred to as Linked Data. As we have a large resource, DBpedia, which provides Linked Data for use by developers, I felt it would be useful to experiment with an existing resource (one based on the structured content held in Wikipedia). I was particularly interested in the following three questions:

- How easy would it be for an experienced Linked Data developer to write code which would provide an answer? Would it be 10 lines of code which could be written in 10 minutes, a million lines of code which would take a large team years to write or somewhere in between?

- Is the Linked Data content held in DBpedia of sufficient consistency and quality to allow an answer to be provided without the need for intensive data cleansing?

- What additional issues might the experiences gained in this challenge raise?

A SPARQL Query To Answer My Challenge

In addition to issuing my challenge on this blog and using Twitter to engage with a wider community, I also raised the challenge in the Linked Data Web group in LinkedIn (note you need to be a member of the group to view the discussion). It was in this group that the discussion started, with Kingsley Idehen (CEO at OpenLink Software) clarifying some of the issues I’d raised. Alejandra Garcia Rojas, a Semantic Web Specialist, was the developer who immediately responded to my query: within a few hours she provided an initial summary, and a few days later she gave me the final version of her SPARQL query (as described in Wikipedia, SPARQL is a query language for Linked Data), which was used to answer my question. Alejandra explained that it should be possible to use the following single SPARQL query to provide an answer from the data held in DBpedia:

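```sparql
prefix dbpedia-owl:
prefix dbpedia-owl-uni:
prefix dbpedia-owl-inst:

# Columns: town, number of universities, postgraduates, undergraduates, population, percentage
select ?town count(?uni) ?pgrad ?ugrad max(?population)
       (((?pgrad + ?ugrad) / max(?population)) * 100) as ?percentage
where {
  # UK universities that record both student counts and a city
  ?uni dbpedia-owl-inst:country dbpedia:United_Kingdom ;
       dbpedia-owl-uni:postgrad ?pgrad ;
       dbpedia-owl-uni:undergrad ?ugrad ;
       dbpedia-owl-inst:city ?town .
  # The town's population, only where one is recorded and is positive
  optional { ?town dbpedia-owl:populationTotal ?population . FILTER (?population > 0) }
}
group by ?town ?pgrad ?ugrad
having ((((?pgrad + ?ugrad) / max(?population)) * 100) > 0)
# Sort by the sixth result column (?percentage), largest first
order by desc 6
```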
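For readers more at home in a general-purpose language, the logic of the query can be mirrored over rows that have already been fetched. The Python sketch below is illustrative only: the `UniversityRow` type, the `rank_towns` function and the sample rows are hypothetical, and the sample figures are placeholders rather than real statistics. One deliberate difference: the query above groups by `?town ?pgrad ?ugrad`, whereas this sketch sums students across all universities in a town, which is closer to the modified query reporting the number of universities and total students per town.

```python
from collections import defaultdict
from typing import NamedTuple, Optional


class UniversityRow(NamedTuple):
    """One hypothetical result row for a single university."""
    town: str
    postgrad: int
    undergrad: int
    population: Optional[int]  # None plays the role of an unbound OPTIONAL ?population


def rank_towns(rows: list[UniversityRow]) -> list[tuple[str, int, int, int, float]]:
    """Return (town, universities, students, population, percentage), highest percentage first."""
    universities: dict[str, int] = defaultdict(int)
    students: dict[str, int] = defaultdict(int)
    population: dict[str, int] = {}
    for row in rows:
        universities[row.town] += 1
        students[row.town] += row.postgrad + row.undergrad
        # FILTER (?population > 0) followed by max(?population): keep the largest positive value.
        if row.population is not None and row.population > 0:
            population[row.town] = max(population.get(row.town, 0), row.population)
    results = []
    for town, total in students.items():
        if town not in population:
            continue  # an unbound population leaves the percentage unbound, so the town drops out
        percentage = total / population[town] * 100
        if percentage > 0:  # the HAVING clause
            results.append((town, universities[town], total, population[town], percentage))
    # ORDER BY DESC on the percentage column.
    return sorted(results, key=lambda r: r[4], reverse=True)


# Placeholder rows for illustration only; these are not real figures.
rows = [
    UniversityRow("TownA", 1000, 4000, 50000),
    UniversityRow("TownA", 500, 1500, 50000),
    UniversityRow("TownB", 2000, 8000, 200000),
    UniversityRow("TownC", 100, 900, None),
]
for town, n_unis, n_students, pop, pct in rank_towns(rows):
    print(f"{town}: universities={n_unis}, students={n_students}, population={pop}, {pct:.1f}%")
```

TownC is left out of the results because it has no population value, which is exactly how towns silently disappear from the query's answer.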

The Answer To My Challenge

What’s the answer, I hear you asking? The answer, to my slightly modified query in which I’ve asked for the number of universities and the total population for the six towns with the highest proportion of students, is given in the table below:

| City | Nos. of Universities | Student Nos. | Population | Student Proportion |
|---|---|---|---|---|
| Cambridge | 2 | 38,696 | 12 | 3224% |
| Leeds | 5 | 135,625 | 89 | 1523% |
| Preston | 1 | 34,863 | 30 | 1162% |
| Oxford | 3 | 40,248 | 38 | 1059% |
| Leicester | 3 | 54,262 | 62 | 875% |

As can be seen, Cambridge, which has two universities, has the highest proportion of students, with a total student population of 38,696 and an overall population of 12 people. Clearly something is wrong :-) Alejandra has provided a live link to her SPARQL query so you can examine the full responses for yourself. In addition, another SPARQL query provides a list of cities and their universities.

The Quality Of The Data Is The Problem

I was very pleased to discover that it was possible to write a brief and simple SPARQL query (anyone with knowledge of SQL will be able to understand the code). The problem lies with the data. And this exercise has been useful in gaining a better understanding of the flaws in the data and of the need to understand why such problems have occurred.

Following discussions with Alejandra we identified the following problems with the underlying data:

- The population of towns and cities is defined in a variety of ways. We discovered many different variables describing the population (totalPopulation, urbanPopulation, populationEstimate, etc.), and on occasion a variable held more than one value. Moreover, these variables are not present in every city’s description, making it impossible to select the most appropriate value consistently.

- A full list of all UK universities has not been analysed, because the query only processes universities which have student numbers defined. If a university does not provide its number of students, it is discarded.

- Colleges are sometimes defined as universities.
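The first two problems could at least be made visible rather than silently absorbed. The Python sketch below assumes each town's description has been loaded as a dictionary mapping property names (the ones mentioned above) to lists of values; the preference order, the function names and the example inputs are hypothetical choices for illustration, not part of DBpedia. It refuses to guess when a property holds several values, and it lists the universities that would be discarded for lacking student numbers.

```python
from typing import Optional

# Hypothetical preference order: the first property holding exactly one positive value wins.
POPULATION_PREFERENCE = ["totalPopulation", "populationEstimate", "urbanPopulation"]


def choose_population(description: dict[str, list[int]]) -> tuple[Optional[int], str]:
    """Return (population, reason); population is None when no unambiguous value exists."""
    for prop in POPULATION_PREFERENCE:
        values = [v for v in description.get(prop, []) if v > 0]
        if len(values) == 1:
            return values[0], f"used {prop}"
        if len(values) > 1:
            # Several candidate values: report the conflict instead of picking one.
            return None, f"ambiguous: {prop} has {len(values)} values"
    return None, "no population property"


def split_universities(
    universities: dict[str, Optional[int]],
) -> tuple[dict[str, int], list[str]]:
    """Separate universities with student numbers from those a query would silently discard."""
    usable = {name: n for name, n in universities.items() if n is not None}
    discarded = sorted(name for name, n in universities.items() if n is None)
    return usable, discarded


# Hypothetical inputs, for illustration only.
print(choose_population({"totalPopulation": [120000]}))
print(choose_population({"totalPopulation": [12, 120000]}))
print(choose_population({"urbanPopulation": [95000]}))
usable, discarded = split_universities({"Univ X": 20000, "College Y": None})
print("Discarded for lack of student numbers:", discarded)
```

Stopping at an ambiguous property, rather than falling through to the next one, is a judgement call; the point is that every choice is reported rather than hidden.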

What Have We Learnt?

What have we learnt from this exercise? We have learnt that although the necessary information to answer my query may be held in DBpedia, it is not available in a format which is suitable for automated processing.

I have also learnt that SPARQL queries need not be intimidating and, given the necessary expertise, need not be time-consuming to write.

The bad news, though, is that although DBpedia appears to be fairly central to the current publicity surrounding Linked Data, it does not appear to be capable of providing end user services on the basis of this initial experiment.


I do not know, though, what the underlying problems with the data are. It could be due to the complexity of the data modelling, the inherent limitations of the distributed data collection approach used by Wikipedia, limitations of the workflow process in taking data from Wikipedia for use in DBpedia – or simply that the apparently simple query “which place in the UK has the highest per capita student population?” has many implicit assumptions which can’t be handled by DBpedia’s representation of the data stored in Wikipedia.

If answering such apparently simple queries requires much more complex data modelling, business models will be needed to justify the additional expenditure involved in handling that complexity. Although there might be valid business reasons for doing this in areas such as biomedical data, it is questionable whether this is the case for answering essentially trivial questions such as the one I posed. If so, the similarly trivial question which Tim Berners-Lee used back in 1998 – “Is there a green car for sale for around $15000 in Queensland?” – was perhaps responsible for misleading people into thinking the Semantic Web was for ordinary end users. I am now starting to wonder whether a better strategy for those involved in Linked Data activities would be to purposely distance it from typical end users and target, instead, identified niche areas.

A more general concern which this exercise has alerted me to is the dangers of assuming that the answer to a Linked Data query will necessarily be correct. In this case it was clear that the results were wrong. But what if the results had only been slightly wrong? And what if you weren’t in a position to make a judgement on the validity of the answers?
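Some errors can be caught even without knowing the true figures. The Python sketch below applies two simple plausibility rules to the student numbers and populations reported in the table above: a town cannot have more students than residents, and a town hosting a university should not have a tiny population. The function name and the `MIN_PLAUSIBLE_POPULATION` threshold are hypothetical choices for illustration.

```python
# Hypothetical threshold: a town hosting a university is assumed to have at least this many residents.
MIN_PLAUSIBLE_POPULATION = 1000


def implausibility_reasons(students: int, population: int) -> list[str]:
    """Return the reasons a (students, population) pair looks wrong; an empty list means no red flags."""
    reasons = []
    if population < MIN_PLAUSIBLE_POPULATION:
        reasons.append(f"population {population} is below {MIN_PLAUSIBLE_POPULATION}")
    if students > population:
        reasons.append("more students than residents (proportion above 100%)")
    return reasons


# Student numbers and populations as reported in the results table.
TABLE = [
    ("Cambridge", 38696, 12),
    ("Leeds", 135625, 89),
    ("Preston", 34863, 30),
    ("Oxford", 40248, 38),
    ("Leicester", 54262, 62),
]

for city, students, population in TABLE:
    print(city, implausibility_reasons(students, population))
```

Every row in the table fails both rules. Checks like these only catch gross errors, though: a result that is slightly wrong would pass them, which is exactly the concern raised above.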

On the LinkedIn discussion Chris Rusbridge summarised his particular concerns: “My question is really about the application of tools without careful thought on their implications, which seems to me a risk for Linked Data in particular”. Chris went on to ask “what are the risks of running queries against datasets where there are data of unknown provenance and qualification?”

My simple query has resulted in me asking many questions which hadn’t occurred to me previously. I welcome comments from others with an interest in Linked Data.

An Updated Challenge For Linked Data (and Other) Developers

It would be a mistake to regard the failure to obtain an answer to my challenge as an indication of limitations of the Linked Data concept – the phrase ‘garbage in, garbage out’ is as valid in a Linked Data world as it was when it was coined in the days of IBM mainframe computers.

An updated challenge for Linked Data developers would be to answer the query “What are the top five places in the UK with the highest proportion of students?” The answer should list the town or city and the student percentage, together with the number of universities, the number of students and the overall population.

Rather than using DBpedia, the official source of such data would be a better starting point. The UK government has published entry points for performing SPARQL queries across a variety of statistics, so Linked Data developers may wish to use the interfaces for government statistics and educational statistics.
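Answering the updated challenge from official sources means joining institution-level student numbers to town-level population figures that may come from a different dataset. The Python sketch below assumes both have already been retrieved (for example as SPARQL results) into plain Python structures; the data shapes, the `top_places` and `normalise` functions and the name-matching rule are hypothetical, and the example figures are placeholders rather than real statistics. It returns the fields the challenge asks for and reports towns it could not match instead of dropping them silently.

```python
from collections import defaultdict
from typing import NamedTuple


class Place(NamedTuple):
    town: str
    percentage: float
    universities: int
    students: int
    population: int


def normalise(name: str) -> str:
    """Hypothetical matching rule: compare town names case-insensitively, ignoring extra spaces."""
    return " ".join(name.split()).casefold()


def top_places(
    institutions: list[tuple[str, str, int]], populations: dict[str, int], n: int = 5
) -> tuple[list[Place], list[str]]:
    """institutions: (name, town, students) tuples; populations: {town: residents}.

    Returns the top-n places by student percentage and the towns lacking a population figure.
    """
    pop_by_key = {normalise(t): p for t, p in populations.items() if p > 0}
    students: dict[str, int] = defaultdict(int)
    universities: dict[str, int] = defaultdict(int)
    display: dict[str, str] = {}  # first spelling seen for each normalised town name
    for _name, town, count in institutions:
        key = normalise(town)
        display.setdefault(key, town)
        students[key] += count
        universities[key] += 1
    unmatched = sorted(display[k] for k in students if k not in pop_by_key)
    places = [
        Place(display[k], students[k] / pop_by_key[k] * 100, universities[k], students[k], pop_by_key[k])
        for k in students
        if k in pop_by_key
    ]
    places.sort(key=lambda p: p.percentage, reverse=True)
    return places[:n], unmatched


# Placeholder data for illustration only; not real statistics.
institutions = [
    ("University P", "Town P", 20000),
    ("University Q", "town p", 5000),
    ("University R", "Town R", 8000),
    ("University S", "Town S", 3000),
]
populations = {"Town P": 100000, "Town R": 200000}
places, unmatched = top_places(institutions, populations)
for p in places:
    print(f"{p.town}: {p.percentage:.1f}% ({p.universities} universities, "
          f"{p.students} students, population {p.population})")
print("No population figure for:", unmatched)
```

Reporting the unmatched towns alongside the ranking gives the reader some basis for judging how complete the answer is.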

Of course it might be possible to provide an answer to my query using approaches other than Linked Data. In a post entitled “Mulling Over =datagovukLookup() in Google Spreadsheets” Tony Hirst asked “do I win if I can work out a solution using stuff from the Guardian Datastore and Google Spreadsheets, or are you only accepting Proper Linked Data solutions”. Although I’m afraid there’s no prize, I would be interested in seeing if an answer to my query can be provided using other approaches.


The buzz at the conference clearly focussed on the Semantic Web. The conference’s opening keynote was delivered by Dame Wendy Hall and Professor Nigel Shadbolt, both highly regarded researchers at the School of Electronics and Computer Science (ECS) at the University of Southampton whose long standing and influential involvement which dates back to the early days of the Web continues to the present, as can be seen from the

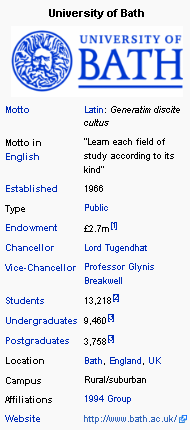


I was told that DBpedia provides access to structured text boxes in Wikipedia entries, such as the factual entries for Universities (as illustrated).
